Parsero is a free and open-source tool available on GitHub. It reads the robots.txt files of websites and web apps, which makes it useful for gathering information about a target domain, and any domain can be targeted with it. Parsero is a command-line tool written in Python, so Python must be installed on your Kali Linux system to use it.

Parsero is a Python script that reads the robots.txt file of a live web server and looks at its Disallow entries. These entries tell search engines which directories or files hosted on the server must not be indexed. Search engine crawlers, such as Google's, follow links to discover and index a website's pages, so administrators use Disallow entries to keep private or sensitive paths out of search results. However, robots.txt is publicly readable, and its entries are only a request to well-behaved crawlers, not an access control: a disallowed path is often still reachable by anyone who reads the file. Parsero helps in such situations by requesting each Disallow entry and checking the HTTP status code it returns, which shows whether that path is actually accessible.
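The mechanism described above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch written for illustration, not Parsero's actual source code: the function names (`parse_disallow_entries`, `disallowed_urls`, `status_of`, `check_domain`) are made up for this example, it uses only the standard library, and it assumes the site serves its robots.txt over plain HTTP at `http://<domain>/robots.txt`.

```python
"""Hypothetical sketch of the idea behind Parsero (not Parsero's source code):
read a site's robots.txt, collect its Disallow entries, and request each
disallowed path to see which HTTP status code it returns."""
from __future__ import annotations

import http.client
import urllib.error
import urllib.request
from urllib.parse import urljoin


def parse_disallow_entries(robots_txt: str) -> list[str]:
    """Return the non-empty Disallow paths in file order, without duplicates."""
    entries: list[str] = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow":
            path = value.strip()
            # An empty "Disallow:" line blocks nothing, so it is skipped.
            if path and path not in entries:
                entries.append(path)
    return entries


def disallowed_urls(domain: str, robots_txt: str) -> list[str]:
    """Turn each Disallow path into an absolute URL on the given domain."""
    base = f"http://{domain}/"
    return [urljoin(base, path) for path in parse_disallow_entries(robots_txt)]


def status_of(url: str, timeout: float = 10.0) -> int | None:
    """Return the final HTTP status code for url (urllib follows redirects),
    or None if the request could not be made or got no HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a code
        return err.code
    except (OSError, ValueError, http.client.HTTPException):
        # DNS failure, refused connection, timeout, malformed URL or response.
        return None


def check_domain(domain: str) -> dict[str, int | None]:
    """Fetch http://<domain>/robots.txt and map each disallowed URL to its status."""
    with urllib.request.urlopen(f"http://{domain}/robots.txt", timeout=10) as response:
        robots_txt = response.read().decode("utf-8", errors="replace")
    return {url: status_of(url) for url in disallowed_urls(domain, robots_txt)}
```

Calling `check_domain("example.com")` performs real network requests and returns a dictionary that maps each disallowed URL to its status code. A 200 marks a path anyone can open even though robots.txt asks crawlers to skip it. The parsing is kept separate from the network code so it can be checked offline. Note that some servers answer urllib's default User-Agent differently from a browser's, so the codes this sketch sees may not match what Parsero reports.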

Installation

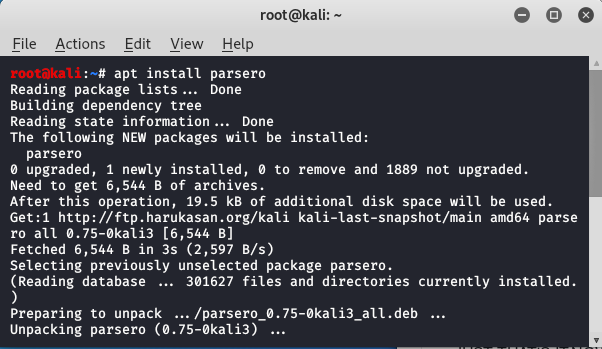

Step 1: Use the following command to install the Parsero tool.

sudo apt install parsero

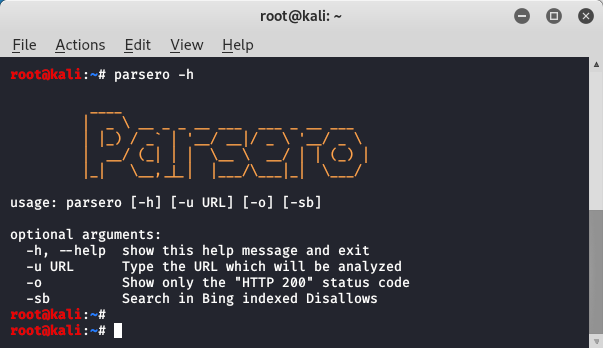

Step 2: Once the installation finishes, run the tool with the -h option to display its help menu.

parsero -h

The help menu shows that the tool is installed and working. Now let's look at an example of using it.

Usage

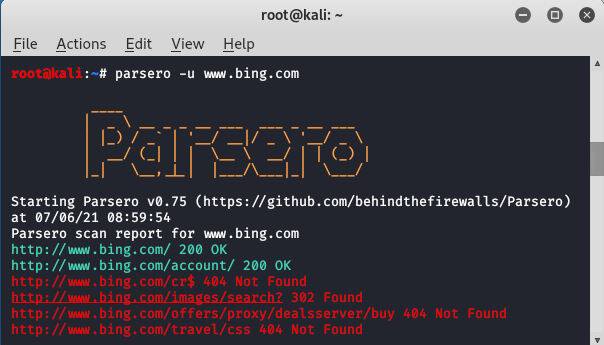

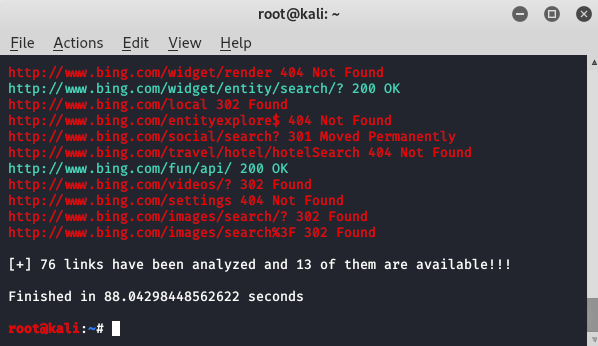

Example: Use Parsero to scan a website's robots.txt file.

parsero -u <domain>

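Replace <domain> with the domain you want to test. Parsero fetches that site's robots.txt file, requests each Disallow entry, and prints the HTTP status code returned for it, so you can quickly see which supposedly hidden paths are reachable. This makes it very useful for security researchers during the reconnaissance phase of a penetration test.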
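When each disallowed path has been requested, the HTTP status code it returns is what tells you whether the path is exposed. The helper below is a hypothetical sketch for triaging such results by grouping URLs according to what their codes suggest. It assumes the results are already available as a Python dictionary mapping each URL to its status code (or None when nothing answered), which is not Parsero's own output format, and the verdict labels are this example's rough interpretation rather than anything Parsero defines.

```python
"""Hypothetical helper for triaging robots.txt scan results. The input format
(a mapping of URL -> HTTP status code, or None if the request failed) is an
assumption made for this example, not Parsero's output format."""
from __future__ import annotations


def classify(status: int | None) -> str:
    """Map an HTTP status code to a rough, human-readable verdict."""
    if status is None:
        return "no response"
    if 200 <= status < 300:
        return "accessible"  # reachable despite being disallowed: review first
    if 300 <= status < 400:
        return "redirect"
    if status in (401, 403):
        return "restricted"  # the path likely exists but requires authorization
    if status in (404, 410):
        return "not found"
    return "other"


def triage(results: dict[str, int | None]) -> dict[str, list[str]]:
    """Group URLs by verdict, sorting each group for stable output."""
    groups: dict[str, list[str]] = {}
    for url, status in results.items():
        groups.setdefault(classify(status), []).append(url)
    return {verdict: sorted(urls) for verdict, urls in groups.items()}


if __name__ == "__main__":
    # Made-up example data, for illustration only.
    sample = {
        "http://example.com/admin/": 403,
        "http://example.com/backup/": 200,
        "http://example.com/old/": 404,
    }
    for verdict, urls in triage(sample).items():
        print(f"{verdict}: {', '.join(urls)}")
```

Entries in the "accessible" group are the ones worth checking by hand, since robots.txt asked crawlers to skip them yet the server still serves them.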

Hello everyone, and welcome to our community! It is a non-profit community made for hackers.

We help people learn about scams and fraud, and we develop new hacking techniques to keep up with the digital world 🌎 So let's start 🎖
